Telecom Security – Part 10 of 10 in the series.

AI Account Phishing

AI platforms have become essential work tools, handling everything from documents and analysis to code, prototypes, and sensitive conversations. Yet the accounts behind these platforms often remain surprisingly under-protected. Attackers have already noticed. Over the past two years, stolen credentials for ChatGPT and other AI services have appeared on dark-web markets in unexpectedly large volumes.

Group-IB found over 225,000 compromised ChatGPT accounts traded between January and October 2023.

Kaspersky reported a sharp rise in AI-service credential leaks in 2023, with about 664,000 OpenAI-related records exposed – a 33-fold increase in just one year.

By 2025, the scale had become staggering, with reports claiming nearly 20 million OpenAI logins circulating on dark-web markets.

These numbers confirm a simple truth. AI Account Phishing is already mainstream.

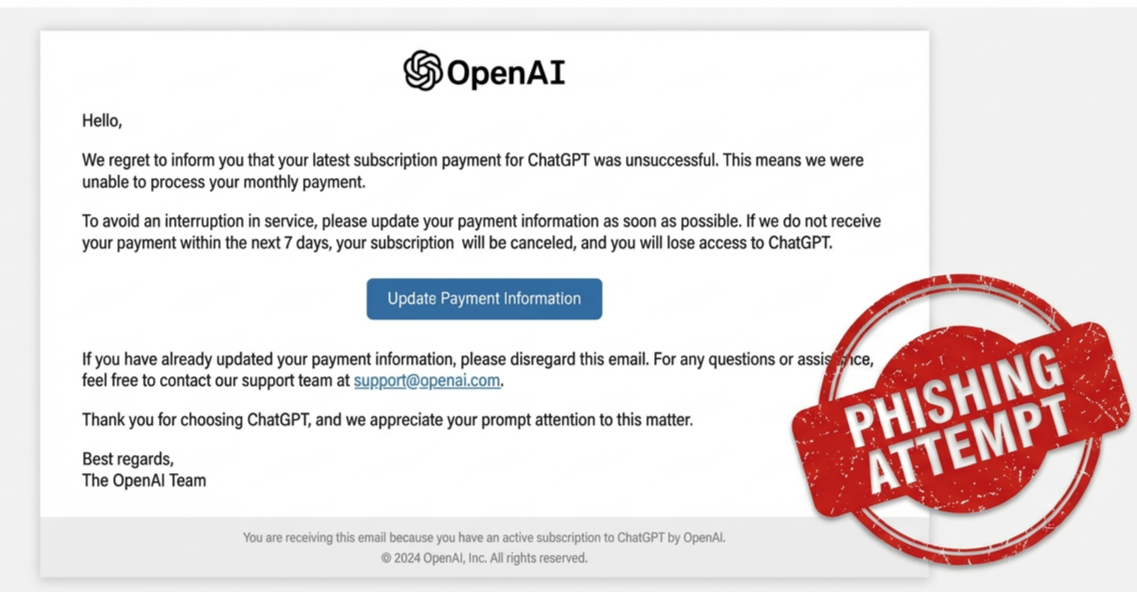

Attackers are combining this stolen-credential pipeline with convincing phishing. They impersonate AI platforms, replicate login flows, clone branding, and deliver carefully crafted links across email, messaging apps, SMS, and even search ads. A 2024 campaign documented by Barracuda used fake OpenAI billing notifications with realistic domains, varied URL paths, and valid TLS certificates.

The goal is simple. Get users to click a link and hand over their AI login or API key.

How AI Account Phishing Works, and Why It’s Growing So Fast

The attack usually starts the same way: a message claiming something urgent. Payment failed. API key unsafe. Access paused. A new model is waiting. Each lure prompts the user to click a link that appears legitimate, often shortened or disguised behind redirects.

The landing pages are nearly indistinguishable from real AI login portals. Domains differ by a single character or use trusted-looking TLDs. Many pages serve valid HTTPS and mimic the exact flow, design, and CSS of official platforms. Once credentials or API keys are entered, attackers can harvest stored files, chat history, documents, and code fragments, run expensive workloads on the victim's account, or bundle the stolen account into a marketplace “log.”

Three structural forces are accelerating this trend.

AI accounts now hold high-value data – prompts, documents, project context, and API keys.

Users trust the channels these platforms use – so fake alerts feel naturally credible.

Users do not treat AI accounts like mission-critical assets – many people access AI tools on personal devices, where infostealer malware spreads easily. Research shows that infostealer families such as LummaC2, Raccoon, and RedLine are a major source of leaked AI credentials.

Phishing and malware feed each other. Infostealer malware lifts credentials already saved on infected devices; phishing captures fresh ones as victims type them. Both circulate rapidly across dark-web markets.

Stopping the Attack Before the Login Page Loads

Most AI Account Phishing depends on one thing. A link.

Whether delivered by email, chat, SMS, or a search ad, the attack requires the victim to click a URL. That URL is the earliest and most reliable point to break the attack.

Modern detection focuses on how the domain behaves. Most phishing pages rely on some combination of newly registered lookalike domains, AI-themed TLDs, redirect chains that hide the final page, obfuscated parameters, shorteners like bit.ly or t.ly, and fast-rotating hosts with valid TLS certificates.
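To make the lookalike, brand-keyword, and shortener signals above concrete, here is a minimal Python sketch using only the standard library. It is not how Fortress URL Scanner DB works: the domain, keyword, and shortener lists, the edit-distance threshold of 2, and the function names are all illustrative assumptions. Signals that need external data, such as domain age or redirect chains, are left out.

```python
from urllib.parse import urlsplit

# Hypothetical reference data for illustration only; a real system would
# maintain much larger, continuously updated lists.
OFFICIAL_DOMAINS = {"openai.com", "chatgpt.com"}
BRAND_KEYWORDS = {"openai", "chatgpt", "gpt"}
SHORTENERS = {"bit.ly", "t.ly", "tinyurl.com"}


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance between two strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution
            ))
        prev = curr
    return prev[-1]


def registrable_domain(host: str) -> str:
    """Naive 'last two labels' heuristic (assumption; see note below)."""
    return ".".join(host.split(".")[-2:])


def url_signals(url: str) -> list[str]:
    """Return a list of suspicious-looking signals for one URL."""
    host = (urlsplit(url).hostname or "").lower()
    domain = registrable_domain(host)
    signals: list[str] = []
    if domain in OFFICIAL_DOMAINS:
        return signals  # exact official domain: no lookalike signals
    if host in SHORTENERS:
        signals.append("shortener")
    if any(keyword in host for keyword in BRAND_KEYWORDS):
        signals.append("brand-keyword")
    label = domain.split(".")[0]
    for official in sorted(OFFICIAL_DOMAINS):
        # A label one or two edits away from an official name is a likely lookalike.
        if 0 < levenshtein(label, official.split(".")[0]) <= 2:
            signals.append("lookalike:" + official)
    return signals
```

For example, `url_signals("https://0penai.com/login")` flags a lookalike of `openai.com` because `0penai` is one substitution away from `openai`. The "last two labels" shortcut mishandles suffixes like `.co.uk`, so production code should consult the Public Suffix List instead.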

This is where Fortress URL Scanner DB comes in.

Built for high-volume, real-time link inspection, it analyzes domains, redirects, and obfuscated URLs to identify dangerous behavior associated with AI Account Phishing. It catches lookalike AI login pages, domains impersonating AI brands, and malicious hosting patterns that change rapidly. It works across messaging channels, notifications, internal systems, and automated workflows.

Fortress URL Scanner DB also maintains a continuously updated risk model for AI-related threat patterns, including:

- domains containing brand-adjacent keywords

- redirect behavior typical of AI-phishing kits

- short-lived hosting and rapid domain churn

- cross-channel delivery patterns common in AI-account lures
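One way to picture a risk model over the four pattern families listed above is a weighted score. The sketch below is a toy: the `LinkFeatures` fields, the weights, and the 30-day and two-channel thresholds are hypothetical, chosen only for illustration, and say nothing about how Fortress URL Scanner DB actually scores links. Collecting the features (WHOIS lookups, following redirects, correlating delivery channels) is out of scope here.

```python
from dataclasses import dataclass


@dataclass
class LinkFeatures:
    # Hypothetical pre-computed features for a single URL.
    brand_keyword_in_host: bool  # brand-adjacent keyword in the domain
    redirect_hops: int           # redirects before the final page
    domain_age_days: int         # proxy for short-lived hosting / churn
    channels_seen: int           # distinct channels (email, SMS, chat...) delivering it


# Illustrative weights (sum to 1.0), not tuned on real data.
WEIGHTS = {
    "brand_keyword": 0.35,
    "redirects": 0.25,
    "new_domain": 0.25,
    "cross_channel": 0.15,
}


def risk_score(f: LinkFeatures) -> float:
    """Return a score in [0, 1]; higher means more suspicious."""
    score = 0.0
    if f.brand_keyword_in_host:
        score += WEIGHTS["brand_keyword"]
    # Redirects contribute proportionally, capped at three hops.
    score += WEIGHTS["redirects"] * min(f.redirect_hops, 3) / 3
    if f.domain_age_days < 30:
        score += WEIGHTS["new_domain"]
    if f.channels_seen >= 2:
        score += WEIGHTS["cross_channel"]
    return round(score, 2)
```

A three-hop redirect to a five-day-old domain with a brand keyword, seen in several channels, scores 1.0, while an established domain with no brand keyword, reached directly from a single channel, scores 0.0. A real model would learn its weights from labeled data rather than fixing them by hand.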

The aim is clear: stop the attack before users ever land on a fake login screen, even when the phishing infrastructure is brand new, short-lived, or built to look legitimate.

How to Protect Against AI Account Phishing

A few practical habits make a significant difference.

Never log in through links in emails, messages, or ads.

Always check the exact domain in the address bar.

Treat AI accounts like any SaaS platform that stores sensitive data.

Rotate API keys regularly and avoid using the same key for multiple projects.

Keep sensitive data in chat history and uploads to a minimum.

Block suspicious links before they reach users.

Use systems that detect brand impersonation domains and aggressive redirect behavior.
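The habit of checking the exact domain can also be automated, for instance in an internal link-handling tool. The Python sketch below contrasts a naive substring test with a hostname check that accepts only an allowlisted domain, or a true subdomain of one, over HTTPS. The allowlist and function names are hypothetical; confirm a platform's real login domains with the platform itself.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for illustration only; verify the real login
# domains with each AI platform before relying on a list like this.
TRUSTED_DOMAINS = ("openai.com", "chatgpt.com")


def naive_check(url: str) -> bool:
    """Flawed: a substring test is fooled by lookalike and user-info tricks."""
    return any(domain in url for domain in TRUSTED_DOMAINS)


def is_trusted(url: str) -> bool:
    """Accept only HTTPS URLs whose host is an allowlisted domain or a subdomain of one."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # .hostname drops any "user@" prefix and port, and lowercases the host.
    host = (parts.hostname or "").rstrip(".")
    # The leading dot stops "login-openai.com" from matching "openai.com".
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


if __name__ == "__main__":
    for url in (
        "https://chatgpt.com/",
        "https://auth.openai.com/",
        "https://openai.com.secure-login.ai/",
        "https://login-openai.com/",
        "https://openai.com@evil.example/",
        "http://chatgpt.com/",
    ):
        print(url, "naive:", naive_check(url), "strict:", is_trusted(url))
```

The parsed hostname is what matters: in `https://openai.com@evil.example/` the part before `@` is user info, so the request actually goes to `evil.example`, which the naive test misses.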

And if checking every link feels exhausting, Fortress URL Scanner DB is happy to lose sleep so you don’t have to.

Conclusion

AI platforms have quietly become part of everyday professional workflows. That makes their accounts part of the modern security perimeter. Attackers target these accounts because they expose data, cost money, and unlock valuable API capabilities. Since almost every attack begins with a link, the most effective defense is stopping that link before the page loads.

Fortress URL Scanner DB intercepts these threats at their earliest point, helping neutralize AI Account Phishing before credentials or API keys can be taken.

Want to protect your subscribers from link-based fraud across every channel?

See how Fortress URL Scanner stops phishing links before the user even sees them!